
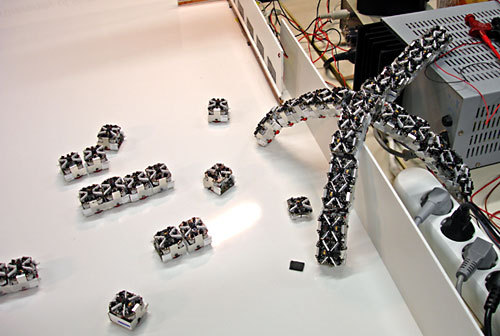

Swarm Robotics

So, in the previous lecture, we saw that

eigenvalues play a fundamentally important

role when you want to understand stability

properties of linear systems. We saw that

if all eigenvalues have strictly negative

real part, the systems are asymptotically

stable. If one eigenvalue is positive,

or has positive real part, then

we're screwed and the system blows up.

Now, today, I'm going to use some of these

ideas to solve a fundamental problem in

swarm robotics. And this is known as the

Rendezvous Problem. And, in fact, what it

is, is you have a collection of mobile

robots that can only measure the relative

displacements of their neighbors. Meaning,

they don't know where they are, but they

know where they are relative to the

neighbors. So here we have agent i,

and it can measure xi minus xj, which is

actually this vector. So, it knows where

agent j is relative to it. And this is

typically what you get when you have an

sensor skirt, right? Because you can see

agents that are within a certain distance

of you. And, as we saw, you know where

they are relative to you. If you don't

have global positioning information, you

don't know where you are so there's no way

you're going to know, globally, where this

thing is, but you know where your

neighbors are locally. And the Rendezvous

Problem is the problem of having all

the agents meet at the same position. You

don't want to specify in advance where

they're going to meet because, since they

don't know where they are, they don't know

where they're going to meet. They can say,

oh, we're going to meet at the origin.

But I don't know where the origin is. Is

it in Atlanta, Georgia? Or is it in

Belgium, or is it India? What do I know,

right? So, the point is, we're going to

meet somewhere, but we don't know where.

Okay.

So, we have actually solved this problem

before. We solved it for the 2-robot case,

where we had 2 robots and we could

control the velocities right away. What we

did is we simply had the agents aim

towards each other. So, u1 was x2 minus

x1. And u2 was x1 minus x2. This simply

means that they're aiming towards each

other. Well, if we do that, we can

actually write down the

following system. This is the closed loop

system now, where we have picked that

controller. So, x dot is this matrix times

x. Okay, let's see if this works now.
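
Written out, the closed-loop system looks as follows. This assumes scalar positions and that the velocities are controlled directly, so that each robot's velocity equals its input; the matrix is the same A matrix that is read out later in the lecture.

```latex
\dot{x} =
\begin{bmatrix} \dot{x}_1 \\ \dot{x}_2 \end{bmatrix}
=
\begin{bmatrix} u_1 \\ u_2 \end{bmatrix}
=
\begin{bmatrix} x_2 - x_1 \\ x_1 - x_2 \end{bmatrix}
=
\underbrace{\begin{bmatrix} -1 & 1 \\ 1 & -1 \end{bmatrix}}_{A}
\begin{bmatrix} x_1 \\ x_2 \end{bmatrix}
```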

Well, how do we do that? Well, we check

the eigenvalues of the A matrix to see

what's going on. Alright. Here is my A

matrix, type eig in MATLAB or whatever

software you're using, and you get that

lambda 1 is 0, lambda 2 is negative 2.
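
If you don't have MATLAB at hand, here is a minimal Python sketch of the same check. It is not the lecture's code: it uses only the standard library and the closed-form eigenvalues of a 2-by-2 matrix (from its trace and determinant), and the helper name `eig_2x2` is made up for this example. In practice you would probably reach for a numerical library routine such as NumPy's `numpy.linalg.eig` instead.

```python
import math


def eig_2x2(a):
    """Eigenvalues of a real 2x2 matrix, assuming they are real (hypothetical helper)."""
    (p, q), (r, s) = a
    trace = p + s
    det = p * s - q * r
    disc = trace * trace - 4.0 * det  # discriminant of the characteristic polynomial
    if disc < 0:
        raise ValueError("complex eigenvalues are not handled in this sketch")
    root = math.sqrt(disc)
    return ((trace + root) / 2.0, (trace - root) / 2.0)


# The closed-loop A matrix of the two-robot system.
A = [[-1.0, 1.0], [1.0, -1.0]]
lambda_1, lambda_2 = eig_2x2(A)
print(lambda_1, lambda_2)
```

The two values should match what eig reports: one eigenvalue at 0 and one at negative 2.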

Okay, this is a little annoying, right?

Because we said asymptotic stability means

that both eigenvalues need negative real

part, this doesn't have that. But

asymptotic

stability also means that the state goes

to the origin and, like we said, we don't

know where the origin is, so why should we

expect that? We should not expect it. We

also know that one positive eigenvalue or

eigenvalue with positive real part makes a

system go unstable. We don't have that

either. In fact, what we have is this

in-between case that we called critical

stability. We have one 0 eigenvalue and

the remaining eigenvalues have negative

real part. So, this is critically stable.

And, in fact, here is a fact that I'm not

going to show but this is a very useful

fact: if you have one eigenvalue

at zero and all the others have negative

real part, then, in a certain sense,

you're acting like asymptotic stability.

Meaning, you go, in this case, not to zero

but you go into a special space called the

null space of A. And the null space of A

is given by the set of all x such that

when you multiply A by x, you get zero

out. That's where you're going to end up.

So, you're going to end up inside this thing

called the null space of A, in this case,

because you have one 0 eigenvalue and all

others having strictly negative real part.

And if you type null(A) in MATLAB, you

find that the null space for this

particular A is given by x equal to

(alpha, alpha), where alpha is any real

number. And why is that? Well, if I take

negative 1, 1, 1, negative 1, this is my A

matrix, and I multiply this by alpha,

alpha, what do I get? Well, I'll get minus

alpha plus alpha here, and then I get plus

alpha minus alpha there, which is clearly

equal to 0. So, this is the null space.
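
The same computation, written as a worked equation (scalar positions assumed):

```latex
A \begin{bmatrix} \alpha \\ \alpha \end{bmatrix}
= \begin{bmatrix} -1 & 1 \\ 1 & -1 \end{bmatrix}
  \begin{bmatrix} \alpha \\ \alpha \end{bmatrix}
= \begin{bmatrix} -\alpha + \alpha \\ \alpha - \alpha \end{bmatrix}
= \begin{bmatrix} 0 \\ 0 \end{bmatrix},
\qquad
\operatorname{null}(A)
= \left\{ \begin{bmatrix} \alpha \\ \alpha \end{bmatrix} : \alpha \in \mathbb{R} \right\}
```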

Okay, what does this mean for me? Well, it

means that x1 is going to go to

alpha and x2 is going to go to alpha. x1

goes to alpha, x2 goes to alpha, which

means that x1 minus x2 goes to 0 because

they go to the same thing. Which means,

that we have, ta-da, achieved rendezvous.

They end up on top of each other. In fact,

they end up at alpha. We don't know

what alpha is, but we know that they end up

there. Okay.
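
To see this happen numerically, here is a small Python sketch that integrates the two-robot system with a forward-Euler step. The starting positions, the step size, and the function name `simulate_two_robots` are assumptions picked for illustration, not values from the lecture.

```python
def simulate_two_robots(x1, x2, dt=0.01, steps=1000):
    """Forward-Euler integration of x1' = x2 - x1 and x2' = x1 - x2."""
    for _ in range(steps):
        u1 = x2 - x1  # robot 1 aims at robot 2
        u2 = x1 - x2  # robot 2 aims at robot 1
        x1, x2 = x1 + dt * u1, x2 + dt * u2
    return x1, x2


# Made-up scalar starting positions.
x1_final, x2_final = simulate_two_robots(0.0, 4.0)
print(x1_final, x2_final)
```

Both robots end up at essentially the same value. In this sketch that value is the average of the two starting positions, because u1 plus u2 is always zero and so x1 plus x2 never changes. The robots themselves never need to know that number.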

Now, if you have more than two agents, we

simply do the same thing. In this case, we

aim towards what's called the centroid of

the neighbors. And, in fact, if we write

this down, we write down the same thing

for more than two agents, we get that x dot

i, before it was just xj minus xi, there

were 2 agents. Now, I'm summing over all

of agent i's neighbors. That is doing the

same thing if you have more than two

agent. And, in fact, if you do that and

you stack all the x's together then you

can write this as x dot is negative L x. And

this is just some bookkeeping. And the

interesting thing here is that L

is known as the Laplacian of the

underlying graph, meaning who can talk to

whom. That's not so important. The

important thing though is that we know a

lot about this matrix L and, again,

it's called the graph Laplacian. And the

fact is that if the

underlying graph is connected, then L has

one 0 eigenvalue, and the rest of the

eigenvalues are positive. But look here,

we have negative L here, which means that

negative L has one 0 eigenvalue and the

rest of the eigenvalues are negative.
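
Here is a minimal Python sketch of that bookkeeping, under a few assumptions: the agents are scalar, the graph is a made-up 4-agent line graph, L is built as degree matrix minus adjacency matrix, and the function names `laplacian` and `consensus_step` are invented for this example. It checks that negative L x matches the per-agent sums over neighbors, and then integrates the system with forward-Euler steps.

```python
def laplacian(neighbors):
    """Graph Laplacian L = D - A built from an undirected neighbor list."""
    n = len(neighbors)
    L = [[0.0] * n for _ in range(n)]
    for i, nbrs in enumerate(neighbors):
        L[i][i] = float(len(nbrs))  # degree of agent i on the diagonal
        for j in nbrs:
            L[i][j] = -1.0          # -1 for every neighbor j of agent i
    return L


def consensus_step(x, neighbors, dt):
    """One forward-Euler step of x_i' = sum over neighbors j of (x_j - x_i)."""
    return [xi + dt * sum(x[j] - xi for j in neighbors[i])
            for i, xi in enumerate(x)]


# Hypothetical connected line graph: 0 - 1 - 2 - 3.
neighbors = [[1], [0, 2], [1, 3], [2]]
L = laplacian(neighbors)

x = [0.0, 1.0, 5.0, 10.0]  # made-up initial positions
n = len(x)

# Bookkeeping check: the stacked form -L x equals the neighbor sums.
minus_Lx = [-sum(L[i][j] * x[j] for j in range(n)) for i in range(n)]
neighbor_sums = [sum(x[j] - x[i] for j in neighbors[i]) for i in range(n)]

# Integrate x' = -L x for 30 time units.
for _ in range(3000):
    x = consensus_step(x, neighbors, dt=0.01)
print(x)
```

Every row of L sums to zero, which is why a vector with the same entry everywhere is mapped to zero. For this undirected example, the agents settle at the average of their starting positions.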

That means that this system here, the

general multiagent system here is actually

critically stable. And we know that it

goes into the null space of L. And it

turns out, and this is a fact from

something called algebraic graph theory.

We don't need to worry too much about it.

We just know that clever graph

theoreticians have figured out that the

null space of L, if the graph is connected,

which means that there is some path

through this network between any two

agents, is given by not alpha, alpha but

alpha, alpha, alpha, alpha, alpha, a bunch

of alphas. So, just like before, if I have

this thing and, in fact, it doesn't have

to be scalar agents, what I do have is

that all the agents go to the same alpha

or in other words, the difference between

the agents will actually disappear. And

when we did this, we designed a controller.

We actually designed this thing here. And

this thing is so useful that it actually

has its own name. It's known as the

consensus equation because it makes agents

agree. In this case, they were agreeing on

position. But this equation actually will

solve the rendezvous problem because of

the fact that the corresponding system

matrix you get, negative L, has the right

eigenvalues which means that the system is

critically stable so we can solve

rendezvous in the multirobot case. And

again, you've seen this video. Now, you

know what it was I was running to generate

this video. In fact, you can go beyond

rendezvous. So, here is actually a course

that I'm teaching at Georgia Tech, where

you want to do a bunch of different

things. And again, all I'm doing is really

the rendezvous equation with a bunch of

weights on top of it. And we're going to

learn how to do this. I just want to show

you this video, because I think it's quite

spectacular. You have robots that have to

navigate an environment. They're running

basically, the consensus equation and

they have to avoid slamming into

obstacles. I should point out that this

was the final project in this

course, which is called network control

systems. And I just wanted to show you

that you can take this very simple

algorithm that we just developed, make

some minor tweaks to it, which we're going

to learn how to do, to solve rather

complicated, multiagent robotics problems.

So here, the robots have to split into

different subgroups and avoid things, they

have to get information, they have to

discover missing agents and so forth. And

we will learn how to do all of that in

this course. Now, having said that, talk

is cheap, right? And simulation is maybe

not cheap, but let's do it for real. In

this case, we're going to have two Khepera

mobile robots, and what we're going to do

is we're going to use the same PID

go-to-goal controllers and we're going to

let the consensus equation plop down

intermediary goal points. And what we're

going to do is we're going to keep track

of our position using odometry, meaning

our wheel encoders. And what the

robots are going to do, is they're

actually going to tell each other where

they are rather than sense where they are

because we haven't talked yet about how

to design sensing links. So, what we're

doing is we're faking the sensing, and

they're telling each other where

they are. And they're using a PID

regulator to go in the direction that the

consensus equation is telling them to go

in. And now, let's switch to the actual

robots running and solving the rendezvous

problem. So, now that we have designed a

closed-loop controller for achieving

rendezvous, we're going to deploy it on our

old friends, two Khepera mobile robots. So,

what we will see now are these two robots

actually executing this algorithm. And we

will be using the same go-to-goal

controller as we designed earlier to

achieve this. And, as always, I have J.P.

De la Croix here to conduct the affairs

and practically, we're going to see two

robots crashing into each other which is

exciting in its own right. But

intellectually, what we're going to see,

is the state of the system asymptotically

driving into the null space of our new A

matrix. And the reason for that is, as

we've seen, this matrix has a 0

eigenvalue, which means that the system is

critically stable and the states approach

the null space. So, J.P., without further

ado, let's see a robotic crash. So, we see

they're immediately turning towards each

other. Pretty exciting stuff, if I

may say so myself.
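
For readers who want to try the experiment in simulation, here is a rough Python sketch of the setup described above: two unicycle-type robots tell each other their positions, the consensus equation supplies an intermediate goal point, and a heading regulator steers toward it. Nearly everything specific here is an assumption rather than the code run on the Kheperas: the unicycle model, the gains, the initial poses, the function names, and the use of a proportional-only heading controller in place of the full PID regulator.

```python
import math


def wrap(angle):
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def step(robot, goal, dt, k_heading=4.0, k_speed=0.5):
    """One Euler step of a unicycle steering toward an intermediate goal point."""
    x, y, theta = robot
    dx, dy = goal[0] - x, goal[1] - y
    heading_error = wrap(math.atan2(dy, dx) - theta)
    omega = k_heading * heading_error  # proportional term only (assumption)
    # Drive forward only when roughly facing the goal; slow down when close.
    v = k_speed * math.hypot(dx, dy) * max(0.0, math.cos(heading_error))
    return (x + dt * v * math.cos(theta),
            y + dt * v * math.sin(theta),
            wrap(theta + dt * omega))


def distance(a, b):
    """Planar distance between two robots given as (x, y, heading)."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


# Made-up initial poses (x, y, heading) for the two robots.
r1 = (0.0, 0.0, math.pi / 2)
r2 = (1.0, 0.5, 0.0)
d_start = distance(r1, r2)

for _ in range(400):
    # With a single neighbor, x_i + (x_j - x_i) is just the neighbor's
    # communicated position, which serves as the intermediate goal point.
    g1 = (r2[0], r2[1])
    g2 = (r1[0], r1[1])
    r1, r2 = step(r1, g1, dt=0.05), step(r2, g2, dt=0.05)

d_end = distance(r1, r2)
print(d_start, d_end)
```

The distance between the two simulated robots shrinks toward zero, which is the simulated version of the robotic crash. Real robots have a physical footprint, so they bump into each other before the distance gets there.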
